It appears that the JavaScript getServerUrl() function will be deprecated with the (imminent) release of Update Rollup 12 for Dynamics CRM 2011. As far as I can tell, from that point forward a new function called getClientUrl() should be used instead.

No doubt the reason for the deprecation is that getServerUrl() cannot always be relied upon to return the correct server context, as described in an earlier post (and therefore the workaround described in that post should no longer be necessary).

We'll have to get back to this to confirm once the rollup has been officially released.
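Assuming the new function ships as described, a defensive helper could prefer getClientUrl() when the context exposes it and fall back to getServerUrl() on older rollups. This is only a sketch: the helper name getOrganizationUrl is made up for illustration, and the argument is expected to be Xrm.Page.context (or an object shaped like it).

```javascript
// Hypothetical helper (not part of the CRM SDK): returns the organization URL
// using getClientUrl() when it exists, otherwise the older getServerUrl().
function getOrganizationUrl(context) {
    if (typeof context.getClientUrl === "function") {
        return context.getClientUrl();
    }
    return context.getServerUrl();
}

// On a CRM form this would be called as:
//   var url = getOrganizationUrl(Xrm.Page.context);
```

Written this way, the same script should keep working before and after the rollup is applied, which is handy while the behavior is still unconfirmed.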

The intention of this blog is to focus on the business application of Microsoft CRM and its surrounding ecosystem. In doing so, whenever discussing a topic I will endeavor to avoid presenting dry facts but rather to relate it to the practical application and/or impact it might have on the business, the pros, cons, best practices etc. The correct way of thinking is paramount when confronting a business challenge and this is what I hope to bring to the table.

Wednesday, December 26, 2012

Form Query String Parameter Tool

The Dynamics team recently released a new utility called the Form Query String Parameter Tool. Essentially it's a useful little utility that can be used to generate the syntax of a create form URL passing in parameters to default field values when the new form is opened.
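To give a feel for what such a URL looks like, here is a minimal sketch of building one by hand. It assumes the usual CRM 2011 pattern of main.aspx with the etn, pagetype and extraqs parameters; the function name, organization URL and field values are placeholders, and values that themselves contain "&" or "=" would need extra care that is not handled here.

```javascript
// Hypothetical helper: builds a create-form URL that defaults field values.
// "defaults" maps attribute names to the values to pre-populate.
function buildCreateFormUrl(orgUrl, entityName, defaults) {
    var pairs = [];
    for (var field in defaults) {
        if (Object.prototype.hasOwnProperty.call(defaults, field)) {
            pairs.push(field + "=" + defaults[field]);
        }
    }
    // The whole name=value list is encoded once and passed as extraqs.
    var extraqs = encodeURIComponent(pairs.join("&"));
    return orgUrl + "/main.aspx?etn=" + entityName +
        "&pagetype=entityrecord&extraqs=" + extraqs;
}

// Example (placeholder organization URL):
var createUrl = buildCreateFormUrl("https://crm.example.com/org", "account", { name: "New Account" });
```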

Having said that, I believe that in most cases using the URL parameter passing approach for defaulting values on the create form should not be necessary. The rest of this post will explain this point of view.

First of all, the out of the box "field mapping" approach - whereby field values from the currently open parent entity are mapped to the child entity form being opened - should be used wherever possible. This covers scenarios where the default values are static regardless of the form "context" and the field being mapped is in fact "mappable".

If the default values are dependent on the form "context" (e.g. new accounts where type = "customer" should have different defaults than those where type = "vendor") or if the field being mapped is not "mappable", then your next best bet is using JavaScript on the form load event to set the defaults. Using JavaScript you can set all the appropriate fields as long as there is a field populated on the form on which to base the branching default logic (the form "context" field). The form context field may be set using standard field mapping, a web service API call (as explained below) or URL parameters. Once it has been obtained, the JavaScript default logic can take over.
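As a rough sketch of that branching logic: the field names (new_accounttype, new_paymentterms, new_priority) and the string values are placeholders invented for illustration, and in a real system the type would more likely be an option set with integer values. The page argument is expected to be Xrm.Page.

```javascript
var FORM_TYPE_CREATE = 1; // value returned by Xrm.Page.ui.getFormType() for a new record

// Hypothetical branching rules keyed on the form "context" field value.
function getDefaultsForType(accountType) {
    if (accountType === "customer") {
        return { new_paymentterms: "Net 30", new_priority: "High" };
    }
    if (accountType === "vendor") {
        return { new_paymentterms: "Net 60", new_priority: "Normal" };
    }
    return {};
}

// Applies the defaults on create forms only, and never overwrites a field
// that already has a value (e.g. one set by standard field mapping).
function applyDefaults(page, contextFieldName) {
    if (page.ui.getFormType() !== FORM_TYPE_CREATE) {
        return;
    }
    var contextAttribute = page.getAttribute(contextFieldName);
    if (!contextAttribute) {
        return;
    }
    var defaults = getDefaultsForType(contextAttribute.getValue());
    for (var name in defaults) {
        var attribute = page.getAttribute(name);
        if (attribute && attribute.getValue() === null) {
            attribute.setValue(defaults[name]);
        }
    }
}

// Form OnLoad handler would simply be:
//   function Form_OnLoad() { applyDefaults(Xrm.Page, "new_accounttype"); }
```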

Using the URL parameters approach would therefore only seem to be necessary when you don't have a form "context" field on the form that is being opened. However this is not necessarily the case either, since you can also leverage web service calls (RESTful or SOAP) to retrieve the "context" from the parent record and perform the necessary branch logic.
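For the RESTful option, the request is just a URL against the organization's OData endpoint. The sketch below only builds that URL; the function name and the example values are illustrative assumptions, and the resulting URL would then be requested with whatever Ajax mechanism you prefer.

```javascript
// Hypothetical helper: builds a REST (OData) URL that retrieves selected
// columns from a single parent record.
function buildParentQueryUrl(orgUrl, entitySetName, recordId, columns) {
    // Lookup ids are typically wrapped in braces, which the endpoint does not expect.
    var id = recordId.replace(/[{}]/g, "");
    return orgUrl + "/XRMServices/2011/OrganizationData.svc/" + entitySetName +
        "(guid'" + id + "')?$select=" + columns.join(",");
}

// Example with placeholder values:
var parentUrl = buildParentQueryUrl(
    "https://crm.example.com/org",
    "AccountSet",
    "{11111111-2222-3333-4444-555555555555}",
    ["CustomerTypeCode"]
);
```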

Therefore it would seem that using form parameters to set default values is necessary in only a fairly limited set of scenarios, namely those where all 3 conditions listed below are true:

- The fields cannot be defaulted via standard parent/child field mapping

- The form does not contain a form "context" field on which to base javascript branching logic

- The form is standalone, i.e. it is not linked to another form from which the "context" can be retrieved using web services

Finally, even in the remaining few scenarios that do require the URL mapping approach, it is really only necessary to pass through a single URL parameter that sets the context; JavaScript can subsequently take over for the remaining default logic.
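A minimal sketch of picking up that single parameter on the target form follows. It relies on Xrm.Page.context.getQueryStringParameters(); the parameter name parameter_type and the helper name are assumptions for illustration, and as far as I know a custom parameter also has to be declared in the form properties before CRM will accept it.

```javascript
// Hypothetical helper: reads one query string parameter from the form context,
// returning null when it was not passed.
function getContextParameter(context, parameterName) {
    var parameters = context.getQueryStringParameters();
    var value = parameters[parameterName];
    return (value === undefined || value === null) ? null : String(value);
}

// On a CRM form:
//   var type = getContextParameter(Xrm.Page.context, "parameter_type");
//   ...then branch on "type" to set the remaining defaults.
```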

Therefore, when all is said and done, while it's always nice to see new tools being developed to facilitate the customization effort, I do not see myself using this particular one all too frequently.

Perhaps there are scenarios that I'm not considering? If I encounter them I'll be sure to dish.

One scenario I have since encountered is differentiating between multiple 1:N field maps for the same two entities, as described in this post.

Thursday, December 20, 2012

Simplifying Navigation: Add to Favorites

This post is another in the series on Simplifying Navigation - it is somewhat of an "oldie" but definitely a "goodie". I have also verified that the same issue and workaround exist in Outlook 2013.

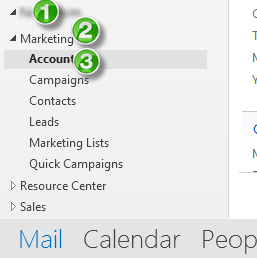

In short, the issue is that the CRM area within the Outlook Client is somewhat buried. For example, navigating to Accounts will involve at least 3 clicks (if this area was not previously opened):

In addition to that, if you are likely to access a particular entity from your CRM data frequently, it would be helpful if you could separate it from the rest of the group.

And fortunately you can, by simply dragging and dropping the CRM folder to your Outlook "Favorites" folder, which will give you single-click access to your CRM data. Much better.

As an aside, it appears that in Outlook 2013 the UI has been simplified and the icons for all the folders have been removed... including the CRM icons. Not sure if this is a long term feature or something that will be tweaked in future Office patches (I personally haven't yet decided whether I prefer it this way or not).

The issue that you may encounter is that the aforementioned drag and drop capability might not be working. And if that is the case, you need to enable this feature by running through the steps in this KB article. Or you can just download this file, unzip and double click to deploy it in your environment (this will add a single registry setting called DisabledSolutionsModule under your MSCRMClient key).

Another option that allows for a little more organization of your CRM links is to use the Outlook "shortcuts" option. To make this effective, the first thing you should do is go to the Navigation Options and move the shortcuts up in the list so they appear in plain sight as shown.

You can then add new shortcuts to the CRM folders of your preference:

And now you can click on the shortcut to navigate to your CRM data:

Thursday, December 6, 2012

Data Import Options

When discussing data imports to CRM there are 2 distinct scenarios:

Data Migration

Data Migration generally refers to a large scale conversion effort, typically performed as part of a CRM Go Live where data is migrated from the legacy system. This exercise is technical in nature, is typically performed by IT professionals and requires great attention to detail. Correspondingly, the migration logic is usually quite involved, meaning that there can be quite a bit of data transformation as part of the exercise. And if migrating from another system, you typically want to connect directly to the data in the legacy database rather than extracting it to an intermediate CSV file. So as a general rule of thumb, for this type of data migration you want to be using a tool like Scribe.

Importing Data

Importing Data generally refers to a more specific one-time or ongoing requirement to import data into a live CRM environment, for example the need to import new leads into the CRM database. Typically this is a more straightforward "one-to-one" exercise (i.e. no data transformation required), and if this is the case the out of the box "Data Import Wizard" can be used for this function.

One thing to note is that while the "Data Import Wizard" has advanced by leaps and bounds, it still has one chief limitation - it can only import new records. If you're looking to use the wizard to update existing records, I'm afraid you're fresh out of luck. And if that is a requirement, you'll once again need to look for a 3rd party tool such as Scribe.

For an excellent general overview of the features and functions of the Data Import Wizard please refer to this post. At the end of the post it lists a number of 3rd party tools that can be used to fill the gap in functionality of the Data Import Wizard should you encounter it, although none of these products has what I would term a "no-brainer" price. Below is a rough comparison in pricing of the various tools on the market (as of this writing):

- Scribe:
  - $3000: 15 user license
  - $5500: 100 user license
  - $1900: 60 day migration license

- Inaport:
  - $1799: standard (should be sufficient for import function)
  - $3499: professional
  - $1195: 30 day migration license ("professional" version, which is the apples to apples comparison for the Scribe migration license)

- Import Manager (best option if end user interface is required)
  - ~$2500

- Import Tool (attractive pricing)
  - ~$1300: Full Version
  - ~$325: 60 day migration license

The following tools don't seem to be realistic candidates based on their price point:

- Starfish
  - $4800/year: Basic Integration
  - $1495: 60 day migration license

- eOne SmartConnect
  - $4500 (not sure if this is a one time or per year fee)

- QuickBix
  - $7000: 100 user license ($70/user)

- Jitterbit
  - $800/month: Standard Edition
  - $2000/month: Professional Edition
  - $4000/month: Enterprise Edition

The following tools do not seem to fulfill the requirement:

- CRM Migrate
  - Tool specifically built for migrating SalesForce to CRM

- CRM Sync:
  - Very little information and no pricing for this tool

Tuesday, November 6, 2012

object doesn't support property or method '$2b'

We encountered this strange error in a CRM online environment. The symptoms were as follows:

- Only occurred on the Outlook client (not in IE)

- Occurred even when form jscript was disabled, indicating that the issue was not with the script

- Appeared when closing out the contact form

The error message looked as follows:

After a bit of scratching around, the solution presented in the following forum discussion seemed to work. In short:

- Close Outlook and IE

- Open IE and delete temporary files (make sure to uncheck “Preserve Favorites website data”)

- Now if you open Outlook, you might see that the grid's ribbon is loading... WAIT for it to load

- Open the Account, Lead, Appointment

Cannot add more picklist or bit fields

I came across this issue today in a 4.0 environment that still uses SQL 2005. Time to upgrade, huh? Happily that's soon to be the case - so this hopefully is just for the record books.

Anyway, the symptom was that while the user was able to add nvarchar fields to the account entity, each attempt to add a new bit field (or for that matter a picklist) would fail with an error message.

Using the trace tool, it was pretty quick to identify the underlying cause. The following exception appeared in the trace file:

Exception: System.Data.SqlClient.SqlException: Too many table names in the query. The maximum allowable is 256.

So what is causing the "too many table names" in the query?

Simple. Every time an attribute is added to an entity, the entity views (regular entity view and filtered view) are updated. The filtered view in particular joins to the StringMap view for picklist and bit fields to obtain the corresponding friendly "name" field. For example, for an account field called "new_flag" it will join to the StringMap view to create a new virtual field in the FilteredAccount view called "new_flagname".

One only needed to look a little higher in the trace file to see the view being constructed with many joins for the bit and picklist fields. If the number of these two types of field combined exceeds 256 (or thereabouts, given other joins that may already exist), the join limit is hit. This is only a limitation on SQL 2005; it no longer applies from SQL 2008 and up.
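The arithmetic behind that limit can be modelled very roughly as follows. This is purely an illustration of the reasoning, not how CRM itself counts anything: the attribute descriptors, the type names and the "otherTables" figure (the base table plus any joins unrelated to picklist and bit fields) are all assumptions.

```javascript
var SQL_2005_TABLE_LIMIT = 256; // the maximum quoted in the exception message

// Counts the attributes that each add one StringMap join to the filtered view.
function countStringMapJoins(attributes) {
    var count = 0;
    for (var i = 0; i < attributes.length; i++) {
        var type = attributes[i].type;
        if (type === "picklist" || type === "bit") {
            count++;
        }
    }
    return count;
}

// True when one more picklist or bit field would still fit under the limit.
function hasRoomForAnotherJoin(attributes, otherTables) {
    return countStringMapJoins(attributes) + otherTables + 1 <= SQL_2005_TABLE_LIMIT;
}
```

Note that nvarchar fields do not count towards the total, which matches the symptom that those could still be added.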

The options for resolving this issue are therefore:

- Upgrade. Really. The technology you are using is around 7 years old (at least) and there does come a point where the compelling reason to upgrade is just the combined benefits of all the various enhancements that have been introduced over time (that is, if you cannot find a single compelling reason).

- Clean up your system and review whether you actually do need all those fields in your environment. This actually is relevant whether you upgrade or not. I'm a big proponent of keeping the environment as clean as possible as my first post on this blog will attest to (disclosure: the above environment is extended directly by the client as we like to encourage our clients to do so they are not reliant on us for every little change required).

Friday, November 2, 2012

Passing Execution Context to Onchange Events

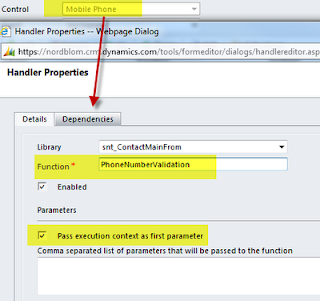

In a previous posting I provided some jscript that can be used to validate phone number formats. In order to invoke the validation for a phone number field I mentioned that you need to create an "on change" event that would pass in the attribute name and attribute description i.e.:

    function Attribute_OnChange() {
        PhoneNumberValidation("attributeName", "attributeDescription");
    }

I thought this would provide a good example for demonstrating the execution context, because the field name and (by extension) its label can be obtained via the execution context. On the surface this is a good thing since you avoid the hard-coding in the example above, and the same function can be applied to all phone number fields in the system. In theory, therefore, you could simplify that example as follows:

    function PhoneNumberValidation(context) {
        // Field name and label come from the attribute that raised the event
        var phone = context.getEventSource().getName();
        var phoneDesc = Xrm.Page.getControl(phone).getLabel();
        var ret = true;
        var phone1 = Xrm.Page.getAttribute(phone).getValue();
        var phone2 = phone1;

        // Nothing to validate when the field is empty
        if (phone1 == null)
            return true;

        // Strip everything except digits
        var stripPhone = phone1.replace(/[^0-9]/g, '');

        if (stripPhone.length < 10) {
            alert("The " + phoneDesc + " you entered must be at least 10 digits. Please correct the entry.");
            Xrm.Page.ui.controls.get(phone).setFocus();
            ret = false;
        } else {
            if (stripPhone.length == 10) {
                // Format 10 digit numbers as (xxx) xxx-xxxx
                phone2 = "(" + stripPhone.substring(0,3) + ") " + stripPhone.substring(3,6) + "-" + stripPhone.substring(6,10);
            } else {
                phone2 = stripPhone;
            }
        }

        Xrm.Page.getAttribute(phone).setValue(phone2);
        return ret;
    }

The only difference is that instead of the "phone" and "phoneDesc" parameters being passed into the validation function, the execution context is instead passed in and the phone attribute and its corresponding phoneDesc label are obtained via the context as local variables. The rest stays the same.

In order for this to work, you would update the "on change" event to call the PhoneNumberValidation function directly and tick the "pass execution context as first parameter" checkbox as shown:

So that's the theory and I think it demonstrates quite nicely how the execution context can be used.

Having said that, in this particular example I prefer the explicit technique referenced in the original posting. The reason is that the validation function in this example (and probably in most data validation cases) has a dual function:

The first is to provide the necessary validation as part of the field on change event, which the example above accomplishes quite well.

The second is to be called from the on save event, so that even if users ignore the message from the on change event they will not be able to save the form (the PhoneNumberValidation function returns true or false to indicate whether validation passed). And when the function is called from the on save event, the specific field context is not going to be there anyway, making it necessary to add some additional logic to handle that case correctly. Therefore what you gain from using the execution context in this example is likely to be offset by the special handling required by the on save event.
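To illustrate the kind of special handling involved, here is one possible arrangement, sketched under a few assumptions: the validation takes the field name explicitly, the on change handler derives that name from the execution context, and the on save handler walks a hard-coded list of phone fields and cancels the save via getEventArgs().preventDefault(). The function names and the field list are illustrative and not taken from the original posting.

```javascript
// Pure helper: returns the formatted number, or null when fewer than 10 digits.
function formatPhoneNumber(raw) {
    var digits = raw.replace(/[^0-9]/g, "");
    if (digits.length < 10) {
        return null;
    }
    if (digits.length === 10) {
        return "(" + digits.substring(0, 3) + ") " + digits.substring(3, 6) + "-" + digits.substring(6, 10);
    }
    return digits;
}

// Validates one field by name on an Xrm.Page-like object.
function validatePhoneField(page, fieldName, notify) {
    var attribute = page.getAttribute(fieldName);
    var value = attribute.getValue();
    if (value === null) {
        return true;
    }
    var formatted = formatPhoneNumber(value);
    if (formatted === null) {
        var control = page.getControl(fieldName);
        notify("The " + control.getLabel() + " you entered must be at least 10 digits. Please correct the entry.");
        control.setFocus();
        return false;
    }
    attribute.setValue(formatted);
    return true;
}

function showMessage(message) {
    alert(message);
}

// On change: the field name comes from the execution context.
function Phone_OnChange(context) {
    validatePhoneField(Xrm.Page, context.getEventSource().getName(), showMessage);
}

// On save: there is no single source field, so check an explicit list.
function Form_OnSave(context) {
    var phoneFields = ["telephone1", "telephone2"]; // assumed field list
    for (var i = 0; i < phoneFields.length; i++) {
        if (!validatePhoneField(Xrm.Page, phoneFields[i], showMessage)) {
            context.getEventArgs().preventDefault(); // cancel the save
            return;
        }
    }
}
```

Note that the on save handler still ends up with hard-coded field names, which is exactly the trade-off described above.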

Thursday, November 1, 2012

Dynamics CRM Connectivity and Firewall Requirements

The Dynamics CRM team released a new white paper providing guidance for the connectivity requirements for typical Dynamics CRM on premise installations. It covers both regular on premise and IFD scenarios.

I don't think there are any earth-shattering revelations in this white paper, and given that it is chock-full of riveting port setting requirements it is a real page turner (thankfully relatively short at 12 pages). All kidding aside, this document is a good reference to file somewhere in your brain and pull out especially for those cases where the IT department has very rigid controls over its internal IT infrastructure.

I thought the following diagram (page 6) to be especially helpful as a visual guide although I'm not sure the dotted line between the client machine and the SQL Server is entirely accurate. I would think that it's necessary for all reporting requirements and not just for the Excel export function. That's unless you're not planning on having any custom reports or that all report development will be performed via RDP on the SQL server. Either way, I think it is unlikely in most installations for this to be a "dotted" line.

Other than that I also think the table on the following page provides a good summary that helps explain the overall picture to system administrators unfamiliar with the Dynamics CRM deployment footprint.

The white paper can be downloaded from here.

Monday, October 29, 2012

JSON vs. Ajax vs. jQuery - layman's guide

JSON, Ajax, and jQuery are technologies that are frequently referenced and used to provide flexibility and data manipulation options when customizing Dynamics CRM, in particular as it relates to JScript customization. This post is therefore dedicated to providing a very brief layman's guide to each of these technologies, covering the essential function of each and the differences between them.

JSON

JSON is simply a data format much like XML, CSV, etc. Its primary function is to provide an alternative to the XML standard. For example (borrowed from Wikipedia), an XML format for a "person" entity might be as follows:

    <person>
      <firstName>John</firstName>
      <lastName>Smith</lastName>
      <age>25</age>
      <address>
        <streetAddress>21 2nd Street</streetAddress>
        <city>New York</city>
        <state>NY</state>
        <postalCode>10021</postalCode>
      </address>
      <phoneNumbers>
        <phoneNumber type="home">212 555-1234</phoneNumber>
        <phoneNumber type="fax">646 555-4567</phoneNumber>
      </phoneNumbers>
    </person>

Whereas the JSON equivalent would be as follows:

    {
      "firstName": "John",
      "lastName": "Smith",
      "age": 25,
      "address": {
        "streetAddress": "21 2nd Street",
        "city": "New York",
        "state": "NY",
        "postalCode": "10021"
      },
      "phoneNumber": [
        { "type": "home", "number": "212 555-1234" },
        { "type": "fax", "number": "646 555-4567" }
      ]
    }

The benefit cited for JSON over the XML standard is that JSON is generally considered to be lighter weight and easier to process programmatically while maintaining all the other "aesthetic" benefits of the XML standard. For a more detailed analysis of these benefits, refer to the JSON web site.
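To illustrate the "easier to process programmatically" point, here is a small sketch using a trimmed version of the person example. It assumes a browser (or script engine) that provides the native JSON object; the variable names are just for illustration.

```javascript
// A trimmed-down version of the "person" example, as JSON text.
var json = '{"firstName":"John","lastName":"Smith","age":25,' +
    '"phoneNumber":[{"type":"home","number":"212 555-1234"}]}';

// Text -> object: the fields are then ordinary properties.
var person = JSON.parse(json);
var fullName = person.firstName + " " + person.lastName;
var homeNumber = person.phoneNumber[0].number;

// Object -> text: change a value and serialize it again.
person.age = person.age + 1;
var updatedJson = JSON.stringify(person);
```

Getting at the same values in the XML version would typically involve walking a DOM tree or running XPath queries.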

Ajax

Ajax is used for asynchronous processing of web pages, meaning that once the web page has been loaded Ajax can be used to interact with the server without interfering with the already rendered web page, i.e. such requests happen as part of background processing. Ajax is therefore typically used to make web pages highly interactive without having to reload the web page every time a new server request is made to retrieve data based on user interaction. A classic example is when you start typing an airport name at one of the online reservation sites and the drop down list shows relevant options based on what you are entering.

Ajax interacts with the server by means of an XMLHttpRequest object. And although the results that are retrieved can be in XML format (as is indicated by the object name) it is typically more common to retrieve the results in JSON format as that is easily consumed by JScript.
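In its rawest form, such a request might look like the sketch below. The helper name getJson and the airport URL in the usage comment are made up for illustration; the XMLHttpRequest calls themselves are the standard ones.

```javascript
// Hypothetical helper: GETs a URL in the background and hands the parsed
// JSON result to onSuccess, or the HTTP status to onError.
function getJson(url, onSuccess, onError) {
    var request = new XMLHttpRequest();
    request.open("GET", url, true); // true = asynchronous
    request.setRequestHeader("Accept", "application/json");
    request.onreadystatechange = function () {
        if (request.readyState !== 4) {
            return; // 4 = request finished and response is ready
        }
        if (request.status === 200) {
            onSuccess(JSON.parse(request.responseText));
        } else {
            onError(request.status);
        }
    };
    request.send();
}

// Usage (placeholder URL):
//   getJson("/airports?startsWith=new", showSuggestions, showError);
```

The page stays responsive while the request is in flight, and the callback only runs once the server has answered.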

jQuery

jQuery is meant to simplify JScript programming by making it easier to navigate the document, handle events, animate, and develop Ajax for web pages, the latter being most relevant for this summary. As far as Ajax is concerned, jQuery leverages Ajax for performing server requests and simplifies interaction with the Ajax layer. Put simply, Ajax is the tool that jQuery will use for handling asynchronous server requests. So when you see the "$.ajax" method in your JScript code it means that jQuery ($.) is being used to execute an Ajax request (ajax).

At this point in time, only the jQuery ajax method is supported by Dynamics CRM. Or to quote from the SDK:

The only supported use of jQuery in the Microsoft Dynamics CRM 2011 and Microsoft Dynamics CRM Online web application is to use the jQuery.ajax method to retrieve data from the REST endpoint. Using jQuery to modify Microsoft Dynamics CRM 2011 application pages or forms is not supported. You may use jQuery within your own HTML web resource pages.
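For orientation, here is a minimal sketch of what that supported usage might look like. It assumes the CRM 2011 REST (OData) endpoint path /XRMServices/2011/OrganizationData.svc, the ContactSet collection, and a response that wraps records in d.results; the helper name buildContactRequest and the callbacks are hypothetical.

```javascript
// Hypothetical helper: build the jQuery.ajax settings for reading a few
// contacts from the CRM 2011 REST endpoint.
function buildContactRequest(serverUrl, onSuccess, onError) {
    return {
        type: "GET",
        url: serverUrl + "/XRMServices/2011/OrganizationData.svc/ContactSet?$select=FullName&$top=5",
        dataType: "json",
        contentType: "application/json; charset=utf-8",
        beforeSend: function (xhr) {
            // Ask for JSON instead of the endpoint's default Atom/XML format.
            xhr.setRequestHeader("Accept", "application/json");
        },
        success: function (data) {
            // Assumed response shape: the records sit in data.d.results.
            onSuccess(data.d.results);
        },
        error: function (xhr, textStatus) {
            onError(textStatus);
        }
    };
}

// In a form script or HTML web resource with jQuery loaded:
// $.ajax(buildContactRequest(Xrm.Page.context.getServerUrl(), showContacts, showError));
```

Keeping the settings in a separate function is only a design choice for this sketch; it keeps the request easy to inspect without needing jQuery or a CRM server at hand.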

Thursday, October 25, 2012

Dynamics CRM 2011 Instance Adapter Released

Yesterday the Dynamics CRM team announced the release of the Microsoft Dynamics CRM 2011 Instance Adapter. The following is the description that was provided for this tool:

This is an additional adapter for use with Connector for Microsoft Dynamics that allows for the synchronization of data between two Microsoft Dynamics CRM 2011 organizations. The adapter allows for communication between two endpoints that exist on any authentication/hosting environment (on-premise, online, IFD, etc.) This means that you can leverage this new adapter to move Microsoft Dynamics CRM 2011 data between test and production servers or from on-premises to the cloud.

Interesting.

This would seemingly provide a solution to a situation that is encountered all too frequently. Namely that the data in the test or staging environment becomes stale and consequently does not provide a good testing ground without performing a refresh. Until now, the only way to do that has been to back up the production database and then restore it to refresh the test environment, something that can take a while to accomplish, especially with the larger CRM databases out there. Not to mention that such a refresh would also overwrite development deltas made in those environments that would need to be re-applied. Now it would seem that you can keep the test or staging environment in sync in a much more seamless fashion.

In addition to this, the tool would also seem to offer a good failover solution for the CRM deployment. That is, by keeping a redundant CRM deployment (on site or in a remote location) that is kept in sync via the Instance Adapter, you now have the option of switching over to this redundant server as part of a disaster recovery plan. This is a feature that certain businesses (typically financial institutions) require (which seems particularly relevant right now as the East Coast braces for the onslaught of Hurricane Sandy).

What is not yet clear to me is how it will handle the following scenarios:

- Deltas - If an update is made in the test environment will this be overwritten? Or will it require the mirrored environment to be "read only"?

- Frequency - How frequently the syncing occurs. Will this be configurable? Real time? Or only on demand? Such options factor into the tool being used as an effective disaster recovery option and also into requirements for bandwidth and such like.

- Two-way - Can syncs go both ways? Almost certainly not going to be the case.

I am quite sure that this tool will not have the robustness of dedicated disaster recovery products such as Double-Take (e.g. High Availability options). Or at least initially... But it definitely seems to be a tool that can be leveraged for optimizing test environments and perhaps to satisfy low to medium-end disaster recovery requirements without having to resort to a 3rd party (restoring a database is considered a very low end disaster recovery plan).

Time of course will tell as we learn more about this tool. To get a jump start on the installation and configuration of this tool you can review the detailed instructions provided in this PowerObjects blog posting.

Wednesday, October 24, 2012

CRM Relationships: Part 2

In my previous post I concluded by saying that, in my experience, Connections tends to cause a degree of consternation in terms of relationship design. This is a fairly difficult topic to approach but in this post we'll review why that is and suggest an approach for determining whether using Connections is an appropriate choice.

The main reason why Connections can be confusing is that it can - by definition - be used to relate any two entities in the system together. And therefore you always have the option of using Connections instead of a custom relationship to set up the relationship between two entities.

I think that the use of Connections often can relate to something more fundamental in terms of relationship design. For instance, Connections come into play most frequently for businesses that use CRM first and foremost as a contact management solution. And in such a scenario Connections are typically used to relate contact and account records together to record current and past associations with one another. And very often you will have many different account and contact types (company, vendor, supplier etc.) being represented within the account and contact forms. The more fundamental question in this case is whether you should be using the account and/or contact entity to represent all these incarnations or whether perhaps you should create a custom entity to represent a specific type of account. For example, if you're an investment capital firm, should a Bank be an account of type "bank" or should it be its own separate entity?

The answer to that question (which is not really the topic of this post) will depend on various factors but ultimately should be driven by whether it's really necessary to make the Bank a type of account. Is the Bank a customer in this example? Are you likely to send marketing initiatives to the Bank? Or is it just a piece of compound information that is related to your actual customers i.e. your investors? I think the answer to the custom vs. account "flavor" decision lies within these questions.

Coming back to Connections - more often than not, once you're dealing with a custom entity you're more likely to want to use a custom relationship than a Connection, because that relationship is likely to be highly defined. Using the Bank/Investor example - if you need to record the bank that the investor uses then simply define the Bank as a lookup attribute of the Investor. There is simply no need to define that relationship via a Connection.

The above example uses a custom relationship for the sake of illustration but it is by no means limited to relationships to a custom entity. It can just as well be the case when relating an account to a contact by means of a custom relationship. The crux of the decision of whether to use a Connection should be based on how tightly the relationship is defined. I think the rule of thumb is that Connections should be used when there is not a highly defined relationship between entities.

For example, the relationship between a Contact and a Lawyer might be represented by a custom relationship if a Contact only can have a single Lawyer. If however the Contact can have multiple Lawyers and each Lawyer could be related to other Contacts in the system (N:N), it would probably be better represented as a Connection. You could of course also define a custom N:N relationship for this. The decision between these two options might be determined by the "centrality" of that relationship. That is, if a Lawyer is just one of the many "useful" client/vendor relationships that you want to store for the Contact (among say, doctors, plumbers, electricians etc.) then you could easily see that you'd have to start defining a whole lot of custom relationships for each one. This will result in your data being a lot more distributed i.e. click here to view lawyers, click there for doctors etc. (and the same is true for views, reporting etc.). However if the Lawyer is a critical piece of information that needs to be stored for your Contacts, then you probably should be separating it out into its own custom relationship.

Perhaps we can therefore summarize as follows:

Connections is not an essential part of the CRM database as in theory it can be replaced through the use of custom relationships. Therefore Connections should not be used in cases where the relationship being represented is important or definitive for your data. Separating out those relationships in terms of navigation via the use of custom relationships is probably a good thing. For other interesting, miscellaneous or viral type relationships that might be perused by a sales rep prior to heading into a meeting or for other similar less defined ad-hoc business procedures - using Connections as a general centralized repository is probably the way to go.

Tuesday, October 23, 2012

CRM Relationships: Part 1

There are many different ways to set up relationships in Dynamics CRM. The good thing about this is that you can accurately and economically model CRM relationships to mirror any real-world counterpart. The challenging thing about this is that inevitably when you have so many choices it can be confusing knowing which option you should go about choosing. And of course that decision has long term design implications so you ideally want to get it right or you'll most likely end up with a design that is not optimal. You could of course change this representation down the line but such a decision will involve data migration, modifying reports etc. etc. and therefore it is more than likely that the design you "go live" with will be a fairly long term proposition.

So the question becomes when deciding to relate 2 CRM entities - which relationship type should I use? At a high level you have the following options available:

- 1-Many

- Many-1

- Many-Many

- Nested

- Relationship

- Connection

I think 1-Many and Many-1 options are in general fairly obvious and we don't need to spend much time discussing those.

The Many-Many relationship can also be obvious but I think it also can be deceptively involved. That is because there are a number of different types of many to many relationships. Without delving into the detail right now we can summarize many to many relationships into the following 3 categories:

- Classic N:N

- Proxy N:N

- Complex N:N

We'll explore the many to many relationship options in a separate blog posting. For now, we can summarize that the classic N:N relationship as it appears in the CRM entity customization should be used to model any "simple" N:N relationship i.e. where entities are related to one another without having to "explain" the nature of their relationship.

The Nested relationship is just an extension of the 1:N and N:1 relationships where an entity is related to itself. The biggest decision when it comes to nested relationships is whether it makes sense to relate an entity to itself rather than creating a custom entity to house that relationship. For example, tickets in a professional services organization that deal with break/fix type issues related to a supported product - it is often a requirement to bundle related tickets that address the same fix or release together. This can be done by designating a single parent ticket and linking all other related tickets underneath it or it can be done by creating a custom entity (call it "Ticket Group") that functions as the umbrella for grouping the related tickets. Both approaches can be valid depending on the requirement but I personally have found the need to create a nested relationship to be rare. Therefore as a rule of thumb I would venture to say that unless you can find a good justification not to go the way of creating another custom entity then that is what you should be doing.

Relationships and Connections are pretty much the same thing. In fact Connections came to fully replace the Relationships option in CRM 2011 so making a decision as far as Relationships go is pretty easy - do not use this option. Pretend it's not there and hide it via the security model so it doesn't confuse. If you have inherited Relationships from your 4.0 upgrade then consider migrating to Connections as part of the upgrade exercise. If that is not an option then hide Connections until you're ready to transition because having them both is just confusing.

That leaves us with Connections. I personally find that Connections (and its precursor Relationships) causes a degree of consternation in terms of relationship design. But we'll delve into that in the next blog posting.

Tuesday, October 16, 2012

Simplifying Navigation: Nav Link Tweaks

This post is a continuation of the Simplifying Navigation theme in Dynamics CRM. The most recent post regarding this topic discussed making Site Map changes to optimize the CRM Areas or "wunderbar" experience. This post is an extension of that topic i.e. making changes to the menu items in each of these areas.

When creating a custom entity, CRM by default adds the entity into a new group called "Extensions" as illustrated below.

The issue with this default configuration is that the navigation can be kind of haphazard. That is, it is quite likely that "Banks" in the example given more naturally belongs under the "Customer" grouping. Like so:

Or perhaps in one of the other groupings or even in a more aptly named custom grouping. Assuming this is the case, this default configuration makes the user have to "hunt" when navigating between the different areas. So although this is nothing more than a minor tweak it is an important one that should not be overlooked. It is similar to replacing the default widget icon that custom entities have with a more relevant, uniquely tailored icon (as also illustrated above) i.e. the application will work fine without these small tweaks, but a conscientious solution integrator should take the time to make them. This is the proverbial crossing of the i's and dotting of the t's - if these types of tweaks are not made then it likely reflects other more fundamental design shortcuts that are being implemented elsewhere in the application due to a general lack of attention to detail. This is something akin to this post in the Harvard Business Review about grammar - the principle of which I generally subscribe to (ok - who is going to be the first person to point out the mistakes in grammar that I have made? Principle or principal? :).

This change can be implemented quite simply by exporting the Site Map and removing the SubArea from the Extensions group (the Extensions group can be removed as well).

Subsequently copy the SubArea into the Group in which you want it to appear. The order of its appearance will match the order in which it is inserted into the Site Map.

Import and publish and you should be all set.

Monday, October 15, 2012

Ribbon: Get View ID (Context Sensitive)

Let's say you want to execute an action that will act on the view that is currently being viewed i.e. the objective is that the action will be context sensitive to the current view. How can this be achieved?

The first thing to do is of course to add a button to the application ribbon and you'd be well advised to use the Ribbon Workbench Tool to do this. As we are adding a button that will appear in the grid views you'll need to be sure to select the HomePage option.

As illustrated in the screenshot above, the action for the ribbon button references a JavaScript function, so you will of course need to create a JScript web resource and a custom function to match what you define for the ribbon action command.

The JScript function will then take care of launching the custom action and passing in the View ID of the view currently being viewed - which is the crux of this post. The script below provides the method for obtaining the view ID:

function GetViewID() {
    try {
        if (document.getElementById('crmGrid_SavedNewQuerySelector')) {
            var view = document.getElementById('crmGrid_SavedNewQuerySelector');
            var firstChild = view.firstChild;
            var currentview = firstChild.currentview;
            if (currentview) {
                var viewId = currentview.valueOf();
                alert(viewId);
            }
        }
        else {
            alert("No Element");
        }
    }
    catch (e) {
        alert(e.message);
    }
}

The result of the above exercise will be a button on the ribbon that when clicked will pop up with the ID of the current view:

You can of course tailor this jscript to pass in the retrieved View ID to whatever custom action you wish to execute.
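As one possible way of doing that tailoring, the sketch below splits the lookup from the action: a function returns the view ID (or null) and the ribbon command handler decides what to do with it. The function names are hypothetical, and the element ID and currentview property are simply carried over from the script above, so the same caveats about relying on the page's DOM apply.

```javascript
// Hypothetical variant of GetViewID that returns the ID instead of alerting it.
// The document is passed in so the function can be exercised outside CRM.
function getCurrentViewId(doc) {
    var selector = doc.getElementById('crmGrid_SavedNewQuerySelector');
    if (!selector || !selector.firstChild) {
        return null; // not on a grid page, or the page structure differs
    }
    var currentView = selector.firstChild.currentview;
    return currentView ? currentView.valueOf() : null;
}

// Hypothetical ribbon command handler.
function RunActionOnCurrentView() {
    var viewId = getCurrentViewId(document);
    if (viewId === null) {
        alert("Could not determine the current view.");
        return;
    }
    // Replace this alert with the custom action that needs the view ID.
    alert("Running action for view " + viewId);
}
```

The ribbon command would then reference RunActionOnCurrentView rather than GetViewID.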

Friday, October 12, 2012

context.getServerUrl() not getting correct context

Whenever you need to run a web service or fetch query from within the jscript of the form you need to first obtain the server URL of your environment. Typically that is performed by running the following set of commands:

var context = Xrm.Page.context; var serverUrl = context.getServerUrl();

However there are a few issues with the context that this returns. For example, if I open up a contact form that has some fetch logic on the CRM server, then the form opens up and renders cleanly. However opening the same contact form remotely will result in an error such as the one shown below:

The reason for this is quite simple:

When running CRM from the server, the URL does not need to include the domain name. For example: http://crm/org1. However when running from a client machine you need to specify (in certain scenarios) the full domain in order for CRM to open e.g. http://crm.acme.com/org1.

The problem with the context.getServerUrl() command is that in both of the above cases it will return http://crm/org1 (without the acme.com) which is going to be valid when running from the server but not when running from a remote client.

Although I have not confirmed this, I believe there will be similar issues if you're running CRM over https i.e. it will cause browser security warning popups to be issued as the command will try to execute over the http context rather than https.

The solution that we found (thanks to this post) was instead to use the following syntax in favor of the more standard formula listed above:

var context = Xrm.Page.context; var serverUrl = document.location.protocol + "//" + document.location.host + "/" + context.getOrgUniqueName();

The result is that when running CRM using the http://crm/org1 URL, serverUrl will return http://crm/org1. And when running CRM using the more fully qualified http://crm.acme.com/org1, serverUrl will correspondingly return http://crm.acme.com/org1. Which of course is what you should expect.

And this small tweak results in the experience being the same no matter which machine CRM is accessed from.
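To make the behavior concrete, here is the same formula wrapped in a small function, as a sketch only. The name buildServerUrl is hypothetical; the location object and organization name are passed in so the two cases described above can be checked side by side.

```javascript
// Hypothetical wrapper around the workaround above: build the server URL from
// the address the browser actually used plus the organization's unique name.
function buildServerUrl(loc, orgUniqueName) {
    return loc.protocol + "//" + loc.host + "/" + orgUniqueName;
}

// In a form script:
// var serverUrl = buildServerUrl(document.location, Xrm.Page.context.getOrgUniqueName());

// Opened on the server with the short host name:
var fromServer = buildServerUrl({ protocol: "http:", host: "crm" }, "org1");
// fromServer is "http://crm/org1"

// Opened from a client with the fully qualified name:
var fromClient = buildServerUrl({ protocol: "http:", host: "crm.acme.com" }, "org1");
// fromClient is "http://crm.acme.com/org1"
```

Because the protocol also comes from the browser's location, a form opened over https yields an https URL.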

Thursday, October 11, 2012

Phone Number Formatting

It is a fairly common and understandable requirement that telephone numbers be formatted when entering via the CRM front end. For example, if a user enters "5551234123" or "555-1234123" or "555-123-4123" or any other variation thereof it should be formatted into the standard format of "(555) 123-4123".

The jscript logic mentioned below can be used to this end. Specifically this logic will work as follows:

- Strip any non-numeric values from the phone number field

- If the phone number is less than 10 digits issue a warning

- If the phone number is equal to 10 digits, format as in the example above

- If the phone number exceeds 10 digits assume it to be an international number and do not perform any additional formatting (besides stripping non-numeric values)

- Prevent the form from being saved unless the phone number adheres to the above rules

The steps to achieve this are as follows --

First, define the PhoneNumberValidation function that performs all the heavy lifting:

function PhoneNumberValidation(phone,phoneDesc) {

ret = true;

var phone1 = Xrm.Page.getAttribute(phone).getValue();

var phone2 = phone1;

if (phone1 == null)

return true;

// First trim the phone number

var stripPhone = phone1.replace(/[^0-9]/g, '');

if ( stripPhone.length < 10 ) {

alert("The " + phoneDesc + " you entered must be at least 10 digits. Please correct the entry.");

Xrm.Page.ui.controls.get(phone).setFocus();

ret = false;

} else {

if (stripPhone.length == 10) {

phone2 = "(" + stripPhone.substring(0,3) + ") " + stripPhone.substring(3,6) + "-" + stripPhone.substring(6,10);

} else {

phone2 = stripPhone;

}

}

Xrm.Page.getAttribute(phone).setValue(phone2);

return ret;

}
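To try the formatting rules outside of CRM, the same logic can be isolated into a pure function with no dependency on Xrm.Page. This is only a minimal sketch: formatPhoneNumber and the shape of its return value are hypothetical names chosen for illustration, not part of the CRM SDK or of the function above.

```javascript
// Hypothetical helper (not part of the CRM SDK): applies the rules listed
// above to a raw string and reports whether the value is acceptable.
function formatPhoneNumber(raw) {
    // An empty field is treated as valid, as in PhoneNumberValidation
    if (raw === null || raw === undefined) {
        return { valid: true, value: raw };
    }

    // Strip everything that is not a digit
    var digits = String(raw).replace(/[^0-9]/g, "");

    // Fewer than 10 digits: invalid, leave the entry untouched
    if (digits.length < 10) {
        return { valid: false, value: raw };
    }

    // Exactly 10 digits: format as (XXX) XXX-XXXX
    if (digits.length === 10) {
        return {
            valid: true,
            value: "(" + digits.substring(0, 3) + ") " +
                   digits.substring(3, 6) + "-" + digits.substring(6, 10)
        };
    }

    // More than 10 digits: assume international, keep the digits only
    return { valid: true, value: digits };
}
```

For example, formatPhoneNumber("555-123-4123").value gives "(555) 123-4123", while formatPhoneNumber("555").valid is false.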

Then for each attribute on the form you wish to format, create an "on change" function as follows:

function Attribute_OnChange() {

// Return the validation result so the "on save" handler can test it

return PhoneNumberValidation("attributeName", "attributeDescription");

}

Where:

- attributeName is the name of the attribute the "on change" function is acting on e.g. telephone1

- attributeDescription is the friendly name for the attribute (used for the warning messages)

Finally, reference the "on change" event from the form "on save" event to prevent the form from saving unless the phone number has the correct format. For example, the following will work:

function Form_onsave(executionObj) {

var val = true;

if (!Attribute_OnChange())

val = false;

if (!val) {

executionObj.getEventArgs().preventDefault();

return false;

}

}

Don't forget to check the "pass execution context as first parameter" in the "on save" event or otherwise it will not work!
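If the form has several phone fields, the "on save" handler can loop over all of the "on change" functions rather than testing each one by hand. The following is a rough sketch only: runSaveValidators is a hypothetical helper name, and it assumes every function passed in returns true or false (i.e. each "on change" function returns the result of PhoneNumberValidation).

```javascript
// Hypothetical helper (not part of the CRM SDK).
// executionObj: the execution context passed to the "on save" event.
// validators: array of functions that each return true (valid) or false.
function runSaveValidators(executionObj, validators) {
    var allValid = true;

    for (var i = 0; i < validators.length; i++) {
        // Call every validator (no short-circuit) so each field gets formatted
        if (!validators[i]()) {
            allValid = false;
        }
    }

    // Cancel the save if any field failed validation
    if (!allValid) {
        executionObj.getEventArgs().preventDefault();
    }

    return allValid;
}

// Example wiring; Telephone1_OnChange and MobilePhone_OnChange are
// hypothetical "on change" functions that return the validation result:
//
// function Form_onsave(executionObj) {
//     return runSaveValidators(executionObj, [Telephone1_OnChange, MobilePhone_OnChange]);
// }
```

Running every validator rather than stopping at the first failure means the user sees all of the warnings in one save attempt.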

Wednesday, October 10, 2012

Reports: Excel Export Formatting

When exporting an SSRS report to Excel all kinds of interesting things can happen from a formatting point of view. The report which might otherwise render quite nicely in SSRS may appear to have been attacked by a bunch of gremlins in its exported Excel format. Several things might contribute to this haphazard effect:

- The cells of data on the spreadsheet might be scattered all over the place

- Some elements of data may occupy several excel cells having been auto-merged in the export

- The report might export into multiple different sheets within the spreadsheet with no apparent rhyme or reason

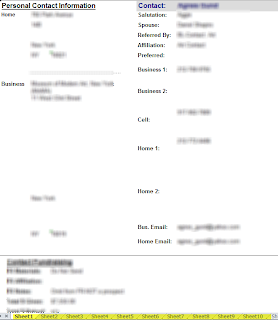

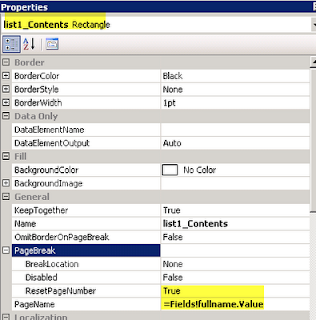

The report shown here illustrates this, with cells of data spread way out and multiple sheets created from a single export file.

The main reason for this is that Excel is a highly structured document and it needs to fit the results into neat rows and columns. If the cells or rows are not aligned then Excel makes automatic decisions in terms of rendering the export. For example, merging cells may bring 2 sets of non-aligned data elements into alignment; expanding a column width or height may similarly bring a non-aligned element into alignment with other report elements.

Therefore it goes without saying that one of the keys to ensuring that a report renders well when exporting to Excel is to ensure alignment. This might seem obvious and is fairly easy when it comes to grid controls which are fairly structured elements to begin with. However if you look at the report above, you will notice that many of the data elements are text fields and you will need to ensure that these all align quite nicely. It might be difficult to discern with the eye - for example, a location of a cell might be 2.51322 vs. 2.5 and that small discrepancy can throw things off in the export to Excel. The following guidelines should therefore be used when creating SSRS reports (in general in terms of overall quality but obviously with extra emphasis when you know the report will be exported to Excel):

- Ensure alignment - you should use the text box properties to ensure that cells are aligned exactly

- Use rectangles extensively for grouping. It is quite common for different parts of a report to have different formatting structures. Using rectangles for grouping helps excel cope with changes to the format.

- If you are using sub-reports in your main report - ensure the page size of your sub-report adheres to the size of the parent report and once again - I'd recommend using a rectangle to enclose the sub-report

- Be careful with page breaks before or after sections. When excel encounters a page break it will create a new sheet.
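Small positional discrepancies such as 2.51322 vs. 2.5 are hard to spot by eye, so it can help to check the numbers mechanically. The sketch below is an illustration only, not an SSRS feature: findOffGridItems is a hypothetical helper, and it assumes you have copied the text box names and their left/top positions (in inches) out of the report into a simple list, and that snapping to an eighth of an inch is a sensible grid for your report.

```javascript
// Hypothetical helper: returns the names of items whose left or top
// position does not sit on the chosen grid.
// items: assumed list of { name, left, top } with positions in inches.
function findOffGridItems(items, gridStep, tolerance) {
    var step = gridStep || 0.125;                            // default grid: 1/8 inch
    var tol = tolerance === undefined ? 0.0001 : tolerance;  // rounding allowance

    function offGrid(value) {
        var nearest = Math.round(value / step) * step;
        return Math.abs(value - nearest) > tol;
    }

    return items
        .filter(function (item) { return offGrid(item.left) || offGrid(item.top); })
        .map(function (item) { return item.name; });
}

// Example with made-up text box names:
var offGridNames = findOffGridItems([
    { name: "txtCustomer", left: 2.5, top: 1.0 },
    { name: "txtAddress", left: 2.51322, top: 1.0 }
]);
// offGridNames contains only "txtAddress"
```

Any item flagged this way is a candidate for having its position corrected in the text box properties before exporting.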

One other important feature to be aware of is that you can also control the name that Excel assigns when creating a new sheet. As illustrated in the screenshot above - the export created default Sheet1, Sheet2 etc. sheet names. You can refer to the following post for a detailed write up of this feature (only available in RS 2008 R2). In my case, I just entered the page details as follows:

The end result is a much cleaner looking export:

Monday, September 24, 2012

A brief history of XRM

I was recently asked by a client about the difference between CRM and ERP solutions. In particular I was asked whether a particular custom solution we were trying to implement fell more neatly into a CRM or ERP framework. As the answer I provided quickly evolved into a brief history of CRM or XRM (according to yours truly), I decided to share in this post -

Generally CRM is considered to be “front office” and ERP is considered to be “back office”. And what you’ll find is that the definition of what is front office and what is back office will vary from company to company depending on their business model. You’ll similarly find that CRM and ERP products have areas of overlap where a particular function can be achieved in either system. Which system to use will ultimately depend on where it makes sense to perform the hand off between front office and back office. If they’re both equal, then you’d typically look to the system that’s traditionally more geared towards handling the requirement. For example – take payments. Typically that’s geared to the back office because the minute you enter something like this, you’re entering a world of credit memos, taxes, recognition, collections etc. But there’ll be many cases where clients would need to handle such a requirement directly from within their CRM application.

Another good example – which is more to the point – is inventory or product management. That is more typically handled by the back office applications as inventory movement is inherently an accounting activity which ERP systems handle quite well. But again you’ll find that there are areas of overlap here too.

I think all the above though is somewhat beside the point. Because I think the XXX (Customer Requirement) that we are talking about is not a typical ERP function. In fact, I’d venture to say that it is neither an ERP function nor a CRM function.

So why are we looking at CRM for this? To answer that question you’ll have to go back to our first session where I presented it not as a "CRM" solution but rather as an "XRM" solution. CRM stands for Customer Relationship Management and grew out of the need to manage the selling process. As being able to sell is inherently linked to performance on the operations side, it was necessary for sales folk to have insight into the service delivery organization, such as support and ticketing, to understand how things were proceeding on that front (as information is power in terms of being able to anticipate and predict the customer’s overall level of satisfaction). And of course, marketing is just the flip side of sales i.e. one feeds the other and vice versa, so a CRM solution is not complete without a marketing module. This is why the classic definition of CRM is an application that covers sales, marketing and service.

But then as a CRM solution essentially became an application that deals with the “front office” as driven by the need to understand the customer’s or prospect’s overall experience, there were always going to be cases where in a particular business model, other types of information would be essential to this visibility. For example, a sales person going to a client meeting might need to be able to easily access information housed in a home grown application, medical records, or purchase history. As such – being a front office application – a CRM solution essentially needed to be able to model a business in whichever shape or form it takes, i.e. CRM solutions needed to be flexible enough to be configured to fit a particular business model.

At this point, a CRM solution is in effect doing a whole lot more than just Customer Relationship Management. Not only might the “customer” be a vendor, partner, reseller etc. (i.e. not the strict definition of “customer”), but also the platform became highly configurable such that it is more common than not to incorporate functionality that does not fit into even a loose definition of “customer relationship management”. Hence the term “XRM” was born which unofficially stands for “anything relationship management” attempting to reflect the fact that the platform is not just limited to “customer relationship management”. Of course even the “R” in XRM might not be relevant either based on the above description since we don’t need to necessarily even be dealing with a “relationship”. But as far as I know, from a naming convention standpoint, we haven’t yet evolved beyond “XRM”.

In contradistinction to the above, ERP solutions are typically (at least at this point in time) more standardized than CRM solutions. This is because the “back office” tends to be more standardized from business to business than the front office. To be sure, back office systems have deep and complex functionality but in my humble opinion (from a non-accountant) standard accounting practices have in principle not changed all that much since the double entry system was first invented (perhaps a little over-simplified) and therefore companies are more likely to be modifying their business practices to model the “best practice” of the ERP solution they are implementing than the other way round. Whether the last statement is true or not, I think you’ll definitely find that CRM solutions are much more flexible solutions than their older ERP counterpart due to the nature of the business problem they are trying to solve.

In summary, although I don’t think the XXX requirement is either a classic CRM or ERP type application, I do believe that it is a great candidate for the XRM model. And we definitely require the flexibility of the XRM platform in order to accommodate the requirements.

Friday, September 14, 2012

CRM Outlook Form and Display Rules

There seems to be an issue with the Outlook CRM create form as it relates to the Display Rule for ribbon buttons. More specifically - and by way of example - you can add a custom button to the ribbon and set it up with a Display Rule to control when the button should show. For instance, you can set up a FormStateRule where State = Existing and this will tell the application that the custom button should only show on the edit (update) form and not the new (create) form.

So where's the issue?

Well in addition to the Display Rule, you can also define an Enable Rule which controls whether the button should appear in an enabled or disabled (i.e. greyed out) state. The Display Rule trumps the Enable Rule i.e. if the Display Rule returns a "Do not display" result, then there's no point in executing the Enable Rule to determine how to display it. Which of course makes sense and is how it should work.

The good news is that this is precisely the behavior if you open up the create form using Internet Explorer navigation. Yay!

The bad news is that it seems that the same behavior is not exhibited if you open up the create form using Outlook navigation. And just to make sure you know what I mean by "Outlook navigation" below is a screenshot to help clarify this:

[Screenshot: Outlook Create Form - note the icon at top left]

Versus the same form opened using Internet Explorer:

[Screenshot: IE Create Form - note IE container]

The issue of course is that in the case of the Outlook Create Form it will execute the Enable Rule function whereas in the IE Create Form it will not. And the result might be an error in the Outlook Create Form as the function may not be relevant to such a form state.

Fortunately there is a workaround until Microsoft fixes this issue (which I haven't made them aware of as the workaround is quite effective and not too onerous). Simply add a condition to your custom jscript function to limit it to working with the update form as highlighted in the example below.

function CustomButtonEnableRule() {

if (Xrm.Page.ui.getFormType() == 2) {

// function logic

} else {

return false;

}